Reinforcement ______ the Likelihood of a Behavior Reoccurring Again in the Future.

Conditioning and Learning

By University of Vermont

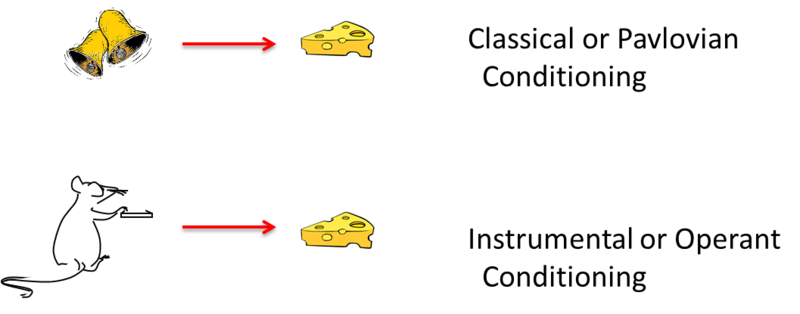

Basic principles of learning are always operating and always influencing human behavior. This module discusses the two most fundamental forms of learning: classical (Pavlovian) and instrumental (operant) conditioning. Through them, we respectively learn to associate (1) stimuli in the environment, or (2) our own behaviors, with significant events, such as rewards and punishments. The two types of learning have been intensively studied because they have powerful effects on behavior, and because they provide methods that allow scientists to analyze learning processes rigorously. This module describes some of the most important things you need to know about classical and instrumental conditioning, and it illustrates some of the many ways they help us understand normal and disordered behavior in humans. The module concludes by introducing the concept of observational learning, which is a form of learning that is largely distinct from classical and operant conditioning.

Learning Objectives

- Distinguish between classical (Pavlovian) conditioning and instrumental (operant) conditioning.

- Understand some important facts about each that tell us how they work.

- Understand how they work separately and together to influence human behavior in the world outside the laboratory.

- Students will be able to list the four aspects of observational learning according to Social Learning Theory.

Two Types of Conditioning

Although Ivan Pavlov won a Nobel Prize for studying digestion, he is much more famous for something else: working with a dog, a bell, and a bowl of saliva. Many people are familiar with the classic study of "Pavlov's dog," but rarely do they understand the significance of its discovery. In fact, Pavlov's work helps explain why some people get anxious just looking at a crowded bus, why the sound of a morning alarm is so hated, and even why we swear off certain foods we've only tried once. Classical (or Pavlovian) conditioning is one of the fundamental ways we learn about the world around us. But it is far more than just a theory of learning; it is also arguably a theory of identity. For, once you understand classical conditioning, you'll recognize that your favorite music, clothes, even political candidate, might all be a result of the same process that makes a dog drool at the sound of a bell.

Around the turn of the 20th century, scientists who were interested in understanding the behavior of animals and humans began to appreciate the importance of two very basic forms of learning. One, which was first studied by the Russian physiologist Ivan Pavlov, is known as classical, or Pavlovian, conditioning. In his famous experiment, Pavlov rang a bell and then gave a dog some food. After this pairing was repeated multiple times, the dog eventually treated the bell as a signal for food, and began salivating in anticipation of the treat. This kind of result has been reproduced in the lab using a wide range of signals (e.g., tones, light, tastes, settings) paired with many different events besides food (e.g., drugs, shocks, illness; see below).

We now believe that this same learning process is engaged, for example, when humans associate a drug they've taken with the environment in which they've taken it; when they associate a stimulus (e.g., a symbol for vacation, like a big beach towel) with an emotional event (like a burst of happiness); and when they associate the flavor of a food with getting food poisoning. Although classical conditioning may seem "old" or "too simple" a theory, it is still widely studied today for at least two reasons: First, it is a straightforward test of associative learning that can be used to study other, more complex behaviors. Second, because classical conditioning is always occurring in our lives, its effects on behavior have important implications for understanding normal and disordered behavior in humans.

In a general way, classical conditioning occurs whenever neutral stimuli are associated with psychologically significant events. With food poisoning, for example, although having fish for dinner may not normally be something to be concerned about (i.e., a "neutral stimulus"), if it causes you to get sick, you will now likely associate that neutral stimulus (the fish) with the psychologically significant event of getting sick. These paired events are often described using terms that can be applied to any situation.

The dog food in Pavlov's experiment is called the unconditioned stimulus (US) because it elicits an unconditioned response (UR). That is, without any kind of "training" or "teaching," the stimulus produces a natural or instinctual reaction. In Pavlov's case, the food (US) automatically makes the dog drool (UR). Other examples of unconditioned stimuli include loud noises (US) that startle us (UR), or a hot shower (US) that produces pleasure (UR).

On the other hand, a conditioned stimulus produces a conditioned response. A conditioned stimulus (CS) is a signal that has no importance to the organism until it is paired with something that does have importance. For example, in Pavlov's experiment, the bell is the conditioned stimulus. Before the dog has learned to associate the bell (CS) with the presence of food (US), hearing the bell means nothing to the dog. However, after multiple pairings of the bell with the presentation of food, the dog starts to drool at the sound of the bell. This drooling in response to the bell is the conditioned response (CR). Although it can be confusing, the conditioned response is almost always the same as the unconditioned response. However, it is called the conditioned response because it is conditional on (or, depends on) being paired with the conditioned stimulus (e.g., the bell). To help make this clearer, consider becoming really hungry when you see the logo for a fast food restaurant. There's a good chance you'll start salivating. Although it is the actual eating of the food (US) that normally produces the salivation (UR), simply seeing the restaurant's logo (CS) can trigger the same reaction (CR).

Another example you are probably very familiar with involves your alarm clock. If you're like most people, waking up early usually makes you unhappy. In this case, waking up early (US) produces a natural sensation of grumpiness (UR). Rather than waking up early on your own, though, you likely have an alarm clock that plays a tone to wake you. Before setting your alarm to that particular tone, let's imagine you had neutral feelings about it (i.e., the tone had no prior meaning for you). However, now that you use it to wake up every morning, you psychologically "pair" that tone (CS) with your feelings of grumpiness in the morning (UR). After enough pairings, this tone (CS) will automatically produce your natural response of grumpiness (CR). Thus, this linkage between the unconditioned stimulus (US; waking up early) and the conditioned stimulus (CS; the tone) is so strong that the unconditioned response (UR; being grumpy) will become a conditioned response (CR; e.g., hearing the tone at any point in the day, whether waking up or walking down the street, will make you grumpy). Modern studies of classical conditioning use a very wide range of CSs and USs and measure a wide range of conditioned responses.

Although classical conditioning is a powerful explanation for how we learn many different things, there is a second form of conditioning that also helps explain how we learn. First studied by Edward Thorndike, and later extended by B. F. Skinner, this second type of conditioning is known as instrumental or operant conditioning. Operant conditioning occurs when a behavior (as opposed to a stimulus) is associated with the occurrence of a significant event. In the best-known example, a rat in a laboratory learns to press a lever in a cage (called a "Skinner box") to receive food. Because the rat has no "natural" association between pressing a lever and getting food, the rat has to learn this connection. At first, the rat may simply explore its cage, climbing on top of things, burrowing under things, in search of food. Eventually, while poking around its cage, the rat accidentally presses the lever, and a food pellet drops in. This voluntary behavior is called an operant behavior, because it "operates" on the environment (i.e., it is an action that the animal itself makes).

Now, once the rat recognizes that it receives a piece of food every time it presses the lever, the behavior of lever-pressing becomes reinforced. That is, the food pellets serve as reinforcers because they strengthen the rat's desire to engage with the environment in this particular manner. In a parallel example, imagine that you're playing a street-racing video game. As you drive through one city course multiple times, you try a number of different streets to get to the finish line. On one of these trials, you discover a shortcut that dramatically improves your overall time. You have learned this new path through operant conditioning. That is, by engaging with your environment (operant responses), you performed a sequence of behaviors that was positively reinforced (i.e., you found the shortest distance to the finish line). And now that you've learned how to drive this course, you will perform that same sequence of driving behaviors (just as the rat presses on the lever) to receive your reward of a faster finish.

Operant conditioning research studies how the effects of a behavior influence the probability that it will occur again. For example, the effects of the rat's lever-pressing behavior (i.e., receiving a food pellet) influence the probability that it will keep pressing the lever. For, according to Thorndike's law of effect, when a behavior has a positive (satisfying) effect or consequence, it is likely to be repeated in the future. However, when a behavior has a negative (painful/annoying) consequence, it is less likely to be repeated in the future. Effects that increase behaviors are referred to as reinforcers, and effects that decrease them are referred to as punishers.

An everyday example that helps to illustrate operant conditioning is striving for a good grade in class, which could be considered a reward for students (i.e., it produces a positive emotional response). In order to get that reward (similar to the rat learning to press the lever), the student needs to change his/her behavior. For example, the student may learn that speaking up in class gets him/her participation points (a reinforcer), so the student speaks up repeatedly. However, the student also learns that s/he shouldn't speak up about just anything; talking about topics unrelated to school actually costs points. Therefore, through the student's freely chosen behaviors, s/he learns which behaviors are reinforced and which are punished.

An important distinction of operant conditioning is that it provides a method for studying how consequences influence "voluntary" behavior. The rat's decision to press the lever is voluntary, in the sense that the rat is free to make and repeat that response whenever it wants. Classical conditioning, on the other hand, is just the opposite, depending instead on "involuntary" behavior (e.g., the dog doesn't choose to drool; it just does). So, whereas the rat must actively participate and perform some kind of behavior to attain its reward, the dog in Pavlov's experiment is a passive participant. One of the lessons of operant conditioning research, then, is that voluntary behavior is strongly influenced by its consequences.

The illustration above summarizes the basic elements of classical and instrumental conditioning. The two types of learning differ in many ways. However, modern thinkers often emphasize the fact that they differ, as illustrated here, in what is learned. In classical conditioning, the animal behaves as if it has learned to associate a stimulus with a significant event. In operant conditioning, the animal behaves as if it has learned to associate a behavior with a significant event. Another difference is that the response in the classical situation (e.g., salivation) is elicited by a stimulus that comes before it, whereas the response in the operant case is not elicited by any particular stimulus. Instead, operant responses are said to be emitted. The word "emitted" further conveys the idea that operant behaviors are essentially voluntary in nature.

Understanding classical and operant conditioning provides psychologists with many tools for understanding learning and behavior in the world outside the lab. This is in part because the two types of learning occur continuously throughout our lives. It has been said that "much like the laws of gravity, the laws of learning are always in effect" (Spreat & Spreat, 1982).

Useful Things to Know about Classical Conditioning

Classical Conditioning Has Many Effects on Behavior

A classical CS (e.g., the bell) does not merely elicit a simple, unitary reflex. Pavlov emphasized salivation because that was the only response he measured. But his bell almost certainly elicited a whole system of responses that functioned to get the organism ready for the upcoming US (food) (see Timberlake, 2001). For example, in addition to salivation, CSs (such as the bell) that signal that food is near also elicit the secretion of gastric acid, pancreatic enzymes, and insulin (which gets blood glucose into cells). All of these responses prepare the body for digestion. Additionally, the CS elicits approach behavior and a state of excitement. And presenting a CS for food can also cause animals whose stomachs are full to eat more food if it is available. In fact, food CSs are so prevalent in modern society that humans are likewise inclined to eat or feel hungry in response to cues associated with food, such as the sound of a bag of potato chips opening, the sight of a well-known logo (e.g., Coca-Cola), or the feel of the couch in front of the television.

Classical conditioning is also involved in other aspects of eating. Flavors associated with certain nutrients (such as sugar or fat) can become preferred without arousing any awareness of the pairing. For example, protein is a US that your body automatically craves more of once you start to consume it (UR): since proteins are highly concentrated in meat, the flavor of meat becomes a CS (or cue, that proteins are on the way), which perpetuates the cycle of craving for yet more meat (this automatic bodily reaction is now a CR).

In a similar way, flavors associated with stomach pain or illness become avoided and disliked. For example, a person who gets sick after drinking too much tequila may acquire a profound dislike of the taste and odor of tequila, a phenomenon called taste aversion conditioning. The fact that flavors are often associated with so many consequences of eating is important for animals (including rats and humans) that are frequently exposed to new foods. And it is clinically relevant. For example, drugs used in chemotherapy often make cancer patients sick. As a consequence, patients often acquire aversions to foods eaten just before treatment, or even aversions to such things as the waiting room of the chemotherapy clinic itself (see Bernstein, 1991; Scalera & Bavieri, 2009).

Classical conditioning occurs with a variety of significant events. If an experimenter sounds a tone just before applying a mild shock to a rat's feet, the tone will elicit fear or anxiety after one or two pairings. Similar fear conditioning plays a role in creating many anxiety disorders in humans, such as phobias and panic disorders, where people associate cues (such as closed spaces, or a shopping mall) with panic or other emotional trauma (see Mineka & Zinbarg, 2006). Here, rather than a physical response (like drooling), the CS triggers an emotion.

Another interesting effect of classical conditioning can occur when we ingest drugs. That is, when a drug is taken, it can be associated with the cues that are present at the same time (e.g., rooms, odors, drug paraphernalia). In this regard, if someone associates a particular smell with the sensation induced by the drug, whenever that person smells the same odor afterward, it may cue responses (physical and/or emotional) related to taking the drug itself. But drug cues have an even more interesting property: They elicit responses that often "compensate" for the upcoming effect of the drug (see Siegel, 1989). For example, morphine itself suppresses pain; however, if someone is used to taking morphine, a cue that signals the "drug is coming soon" can actually make the person more sensitive to pain. Because the person knows a pain suppressant will soon be administered, the body becomes more sensitive, anticipating that "the drug will soon take care of it." Remarkably, such conditioned compensatory responses in turn decrease the impact of the drug on the body, because the body has become more sensitive to pain.

This conditioned compensatory response has many implications. For instance, a drug user will be most "tolerant" to the drug in the presence of cues that have been associated with it (because such cues elicit compensatory responses). As a result, overdose is usually not due to an increase in dosage, but to taking the drug in a new place without the familiar cues, which would have otherwise allowed the user to tolerate the drug (see Siegel, Hinson, Krank, & McCully, 1982). Conditioned compensatory responses (which include heightened pain sensitivity and decreased body temperature, among others) might also cause discomfort, thus motivating the drug user to continue usage of the drug to reduce them. This is one of several ways classical conditioning might be a factor in drug addiction and dependence.

A final effect of classical cues is that they motivate ongoing operant behavior (see Balleine, 2005). For example, if a rat has learned via operant conditioning that pressing a lever will give it a drug, in the presence of cues that signal the "drug is coming soon" (like the sound of the lever squeaking), the rat will work harder to press the lever than if those cues weren't present (i.e., there is no squeaking lever sound). Similarly, in the presence of food-associated cues (e.g., smells), a rat (or an overeater) will work harder for food. And finally, even in the presence of negative cues (like something that signals fear), a rat, a human, or any other organism will work harder to avoid those situations that might lead to trauma. Classical CSs thus have many effects that can contribute to significant behavioral phenomena.

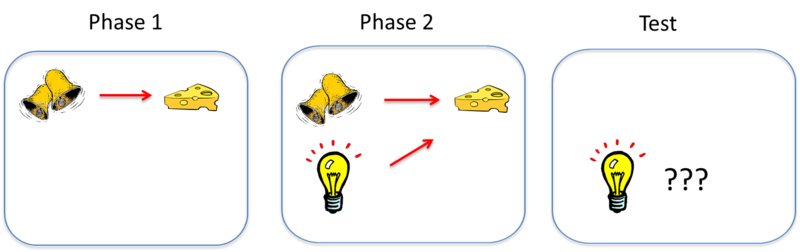

The Learning Process

As mentioned earlier, classical conditioning provides a method for studying basic learning processes. Somewhat counterintuitively, though, studies show that pairing a CS and a US together is not sufficient for an association to be learned between them. Consider an effect called blocking (see Kamin, 1969). In this effect, an animal first learns to associate one CS (call it stimulus A) with a US. In the illustration above, the sound of a bell (stimulus A) is paired with the presentation of food. Once this association is learned, in a second phase, a second stimulus (stimulus B) is presented alongside stimulus A, such that the two stimuli are paired with the US together. In the illustration, a light is added and turned on at the same time the bell is rung. However, because the animal has already learned the association between stimulus A (the bell) and the food, the animal doesn't learn an association between stimulus B (the light) and the food. That is, the conditioned response only occurs during the presentation of stimulus A, because the earlier conditioning of A "blocks" the conditioning of B when B is added to A. The reason? Stimulus A already predicts the US, so the US is not surprising when it occurs with stimulus B.

Learning depends on such a surprise, or a discrepancy between what occurs on a conditioning trial and what is already predicted by cues that are present on the trial. To learn something through classical conditioning, there must first be some prediction error, or the chance that a conditioned stimulus won't lead to the expected outcome. With the example of the bell and the light, because the bell always leads to the reward of food, there's no "prediction error" that the addition of the light helps to correct. However, if the researcher suddenly requires that the bell and the light both occur in order to receive the food, the bell alone will produce a prediction error that the animal has to learn.
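The role of prediction error in blocking can be sketched with a simple error-correction rule in the spirit of the Rescorla-Wagner model (Rescorla & Wagner, 1972), in which every cue present on a trial changes its associative strength in proportion to the difference between what the US supports and what all present cues already predict. The learning rate `alpha`, the asymptote `lam`, the trial counts, and the function name below are illustrative assumptions, not values from any study.

```python
def rescorla_wagner(trials, alpha=0.3, lam=1.0):
    """Return the associative strength of each cue after a list of trials.

    Each trial is (cues_present, us_present). alpha (learning rate) and
    lam (maximum strength the US supports) are hypothetical values.
    """
    strengths = {}
    for cues, us_present in trials:
        target = lam if us_present else 0.0
        # Prediction made by all cues present on this trial
        prediction = sum(strengths.get(cue, 0.0) for cue in cues)
        error = target - prediction  # the "surprise" on this trial
        for cue in cues:
            strengths[cue] = strengths.get(cue, 0.0) + alpha * error
    return strengths


# Blocking group: bell alone predicts food, then bell + light predict food
phase1 = [(("bell",), True)] * 30
phase2 = [(("bell", "light"), True)] * 30
blocked = rescorla_wagner(phase1 + phase2)

# Control group: bell + light are paired with food from the start
control = rescorla_wagner(phase2)

print(f"light after blocking: {blocked['light']:.3f}")
print(f"light in control:     {control['light']:.3f}")
```

In this sketch the light gains almost no strength in the blocking group, because the bell already predicts the food and little error remains to drive learning. In the control group the light and the bell share the available strength.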

Blocking and other related effects indicate that the learning process tends to take in the most valid predictors of significant events and ignore the less useful ones. This is common in the real world. For example, imagine that your supermarket puts big star-shaped stickers on products that are on sale. Quickly, you learn that items with the big star-shaped stickers are cheaper. However, imagine you go into a similar supermarket that not only uses these stickers, but also uses bright orange price tags to denote a discount. Because of blocking (i.e., you already know that the star-shaped stickers indicate a discount), you don't have to learn the color system, too. The star-shaped stickers tell you everything you need to know (i.e., there's no prediction error for the discount), and thus the color system is irrelevant.

Classical conditioning is strongest if the CS and US are intense or salient. It is also best if the CS and US are relatively new and the organism hasn't been frequently exposed to them before. And it is especially strong if the organism's biology has prepared it to associate a particular CS and US. For example, rats and humans are naturally inclined to associate an illness with a flavor, rather than with a light or tone. Because foods are most commonly experienced by taste, if there is a particular food that makes us ill, associating the flavor (rather than the appearance, which may be similar to other foods) with the illness makes it more likely that we will avoid that food in the future, and thus avoid getting sick. This sorting tendency, which is set by evolution, is called preparedness.

There are many factors that affect the strength of classical conditioning, and these have been the subject of much research and theory (see Rescorla & Wagner, 1972; Pearce & Bouton, 2001). Behavioral neuroscientists have also used classical conditioning to investigate many of the basic brain processes that are involved in learning (see Fanselow & Poulos, 2005; Thompson & Steinmetz, 2009).

Erasing Classical Learning

After conditioning, the response to the CS can be eliminated if the CS is presented repeatedly without the US. This effect is called extinction, and the response is said to become "extinguished." For example, if Pavlov kept ringing the bell but never gave the dog any food afterward, eventually the dog's CR (drooling) would no longer happen when it heard the CS (the bell), because the bell would no longer be a predictor of food. Extinction is important for many reasons. For one thing, it is the basis for many therapies that clinical psychologists use to eliminate maladaptive and unwanted behaviors. Take the example of a person who has a debilitating fear of spiders: one approach might include systematic exposure to spiders. Whereas initially the person has a CR (e.g., extreme fear) every time s/he sees the CS (e.g., the spider), after repeatedly being shown pictures of spiders in neutral conditions, pretty soon the CS no longer predicts the CR (i.e., the person doesn't have the fear reaction when seeing spiders, having learned that spiders no longer serve as a "cue" for that fear). Here, repeated exposure to spiders without an aversive consequence causes extinction.
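The rise and fall of a conditioned response across acquisition and extinction can be illustrated with a minimal error-correction sketch. The update rule, the learning rate `alpha`, and the 20-trial phases are assumptions chosen for illustration. This simple rule also treats extinction as a plain loss of associative strength, so it does not capture the finding that extinguished responses can return.

```python
def update(strength, us_present, alpha=0.3, lam=1.0):
    """Move associative strength toward lam (US present) or 0 (US absent).

    alpha and lam are hypothetical parameters for this sketch.
    """
    target = lam if us_present else 0.0
    return strength + alpha * (target - strength)


strength = 0.0
history = []

# Acquisition: the bell (CS) is followed by food (US) on every trial
for _ in range(20):
    strength = update(strength, us_present=True)
    history.append(strength)
strength_after_acquisition = strength

# Extinction: the bell is presented alone, with no food
for _ in range(20):
    strength = update(strength, us_present=False)
    history.append(strength)
strength_after_extinction = strength

print(f"after acquisition: {strength_after_acquisition:.3f}")
print(f"after extinction:  {strength_after_extinction:.3f}")
```

Plotting `history` would show a learning curve that climbs toward its maximum during the pairings and then decays toward zero once the bell is presented alone.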

Psychologists must accept one important fact about extinction, however: it does not necessarily destroy the original learning (see Bouton, 2004). For example, imagine you strongly associate the smell of chalkboards with the agony of middle school detention. Now imagine that, after years of encountering chalkboards, the smell of them no longer recalls the agony of detention (an example of extinction). However, one day, after entering a new building for the first time, you suddenly catch a whiff of a chalkboard and WHAM!, the agony of detention returns. This is called spontaneous recovery: following a lapse in exposure to the CS after extinction has occurred, sometimes re-exposure to the CS (e.g., the smell of chalkboards) can evoke the CR again (e.g., the agony of detention).

Another related phenomenon is the renewal effect: After extinction, if the CS is tested in a new context, such as a different room or location, the CR can also return. In the chalkboard example, the action of entering a new building, where you don't expect to smell chalkboards, suddenly renews the sensations associated with detention. These effects have been interpreted to suggest that extinction inhibits rather than erases the learned behavior, and this inhibition is mainly expressed in the context in which it is learned (see "context" in the Key Vocabulary section below).

This does not mean that extinction is a bad treatment for behavior disorders. Instead, clinicians can increase its effectiveness by using basic research on learning to help defeat these relapse effects (see Craske et al., 2008). For example, conducting extinction therapies in contexts where patients might be most vulnerable to relapsing (e.g., at work) might be a good strategy for enhancing the therapy's success.

Useful Things to Know about Instrumental Conditioning

Most of the things that affect the strength of classical conditioning also affect the strength of instrumental learning, whereby we learn to associate our actions with their outcomes. As noted earlier, the "bigger" the reinforcer (or punisher), the stronger the learning. And, if an instrumental behavior is no longer reinforced, it will also be extinguished. Most of the rules of associative learning that apply to classical conditioning also apply to instrumental learning, but other facts about instrumental learning are also worth knowing.

Instrumental Responses Come Under Stimulus Control

As you know, the classic operant response in the laboratory is lever-pressing in rats, reinforced by food. However, things can be arranged so that lever-pressing only produces pellets when a particular stimulus is present. For example, lever-pressing can be reinforced only when a light in the Skinner box is turned on; when the light is off, no food is released from lever-pressing. The rat soon learns to discriminate between the light-on and light-off conditions, and presses the lever only in the presence of the light (responses in light-off are extinguished). In everyday life, think about waiting in the turn lane at a traffic light. Although you know that green means go, only when you have the green arrow do you turn. In this regard, the operant behavior is now said to be under stimulus control. And, as is the case with the traffic light, in the real world, stimulus control is probably the rule.

The stimulus controlling the operant response is called a discriminative stimulus. It can be associated directly with the response, or the reinforcer (see below). However, it usually does not elicit the response the way a classical CS does. Instead, it is said to "set the occasion for" the operant response. For example, a canvas put in front of an artist does not elicit painting behavior or compel her to paint. It allows, or sets the occasion for, painting to occur.

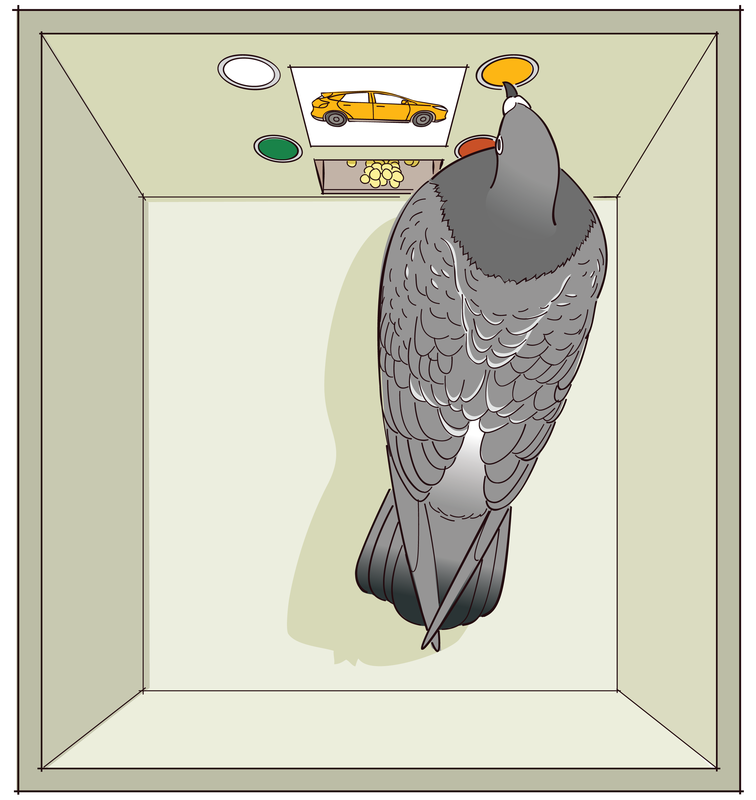

Stimulus-control techniques are widely used in the laboratory to study perception and other psychological processes in animals. For example, the rat would not be able to respond appropriately to light-on and light-off conditions if it could not see the light. Following this logic, experiments using stimulus-control methods have tested how well animals see colors, hear ultrasounds, and detect magnetic fields. That is, researchers pair these discriminative stimuli with those they know the animals already understand (such as pressing the lever). In this way, the researchers can test if the animals can learn to press the lever only when an ultrasound is played, for example.

These methods can also be used to study "higher" cognitive processes. For example, pigeons can learn to peck at different buttons in a Skinner box when pictures of flowers, cars, chairs, or people are shown on a miniature TV screen (see Wasserman, 1995). Pecking button 1 (and no other) is reinforced in the presence of a flower image, button 2 in the presence of a chair image, and so on. Pigeons can learn the discrimination readily, and, under the right conditions, will even peck the correct buttons associated with pictures of new flowers, cars, chairs, and people they have never seen before. The birds have learned to categorize the sets of stimuli. Stimulus-control methods can be used to study how such categorization is learned.

Operant Conditioning Involves Choice

Another thing to know about operant conditioning is that the response always requires choosing one behavior over others. The student who goes to the bar on Thursday night chooses to drink instead of staying at home and studying. The rat chooses to press the lever instead of sleeping or scratching its ear in the back of the box. The alternative behaviors are each associated with their own reinforcers. And the tendency to perform a particular action depends on both the reinforcers earned for it and the reinforcers earned for its alternatives.

To investigate this idea, choice has been studied in the Skinner box by making two levers available for the rat (or two buttons available for the pigeon), each of which has its own reinforcement or payoff rate. A thorough study of choice in situations like this has led to a rule called the quantitative law of effect (see Herrnstein, 1970), which can be understood without going into quantitative detail: The law acknowledges the fact that the effects of reinforcing one behavior depend crucially on how much reinforcement is earned for the behavior's alternatives. For example, if a pigeon learns that pecking one light will reward two food pellets, whereas the other light only rewards one, the pigeon will only peck the first light. However, what happens if the first light is more strenuous to reach than the second one? Will the cost of energy outweigh the bonus of food? Or will the extra food be worth the work? In general, a given reinforcer will be less reinforcing if there are many alternative reinforcers in the environment. For this reason, alcohol, sex, or drugs may be less powerful reinforcers if the person's environment is full of other sources of reinforcement, such as achievement at work or love from family members.
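For readers who do want a little quantitative detail, the quantitative law of effect is commonly written as a hyperbola, B = kR / (R + Re), where B is the rate of the target behavior, R is the reinforcement earned for it, Re is the reinforcement available from all alternatives, and k is the maximum possible response rate. The sketch below uses made-up numbers (k = 100, R = 40) purely to show the shape of the relationship.

```python
def response_rate(reinforcement, alternative_reinforcement, k=100.0):
    """Herrnstein's hyperbola: B = k * R / (R + Re).

    k (the maximum response rate) and all inputs are hypothetical values.
    """
    total = reinforcement + alternative_reinforcement
    if total == 0:
        return 0.0  # nothing reinforces any behavior in this environment
    return k * reinforcement / total


# Same reinforcer for the target behavior, different environments
sparse_environment = response_rate(40, alternative_reinforcement=10)
rich_environment = response_rate(40, alternative_reinforcement=160)

print(sparse_environment)  # 80.0
print(rich_environment)    # 20.0
```

With the reinforcer for the target behavior held constant, responding falls from 80 to 20 as alternative sources of reinforcement become plentiful, which is the point made above about environments rich in other rewards.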

Cognition in Instrumental Learning

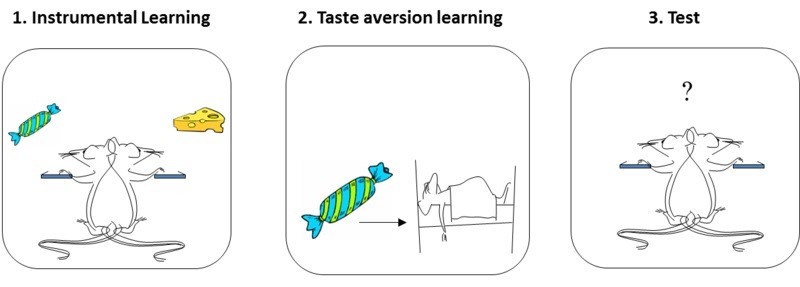

Modern research also indicates that reinforcers do more than merely strengthen or "stamp in" the behaviors they are a consequence of, as was Thorndike's original view. Instead, animals learn about the specific consequences of each behavior, and will perform a behavior depending on how much they currently want, or "value," its consequence.

This idea is best illustrated by a phenomenon called the reinforcer devaluation effect (see Colwill & Rescorla, 1986). A rat is first trained to perform two instrumental actions (e.g., pressing a lever on the left, and on the right), each paired with a different reinforcer (e.g., a sweet sucrose solution, and a food pellet). At the end of this training, the rat tends to press both levers, alternating between the sucrose solution and the food pellet. In a second phase, one of the reinforcers (e.g., the sucrose) is then separately paired with illness. This conditions a taste aversion to the sucrose. In a final test, the rat is returned to the Skinner box and allowed to press either lever freely. No reinforcers are presented during this test (i.e., no sucrose or food comes from pressing the levers), so behavior during testing can only result from the rat's memory of what it has learned earlier. Importantly here, the rat chooses not to perform the response that once produced the reinforcer that it now has an aversion to (e.g., it won't press the sucrose lever). This means that the rat has learned and remembered the reinforcer associated with each response, and can combine that knowledge with the knowledge that the reinforcer is now "bad." Reinforcers do not merely stamp in responses; the animal learns much more than that. The behavior is said to be "goal-directed" (see Dickinson & Balleine, 1994), because it is influenced by the current value of its associated goal (i.e., how much the rat wants/doesn't want the reinforcer).

Things can get more complicated, however, if the rat performs the instrumental actions frequently and repeatedly. That is, if the rat has spent many months learning the value of pressing each of the levers, the act of pressing them becomes automatic and routine. And here, this once goal-directed action (i.e., the rat pressing the lever for the goal of getting sucrose/food) can become a habit. Thus, if a rat spends many months performing the lever-pressing behavior (turning such behavior into a habit), even when sucrose is again paired with illness, the rat will continue to press that lever (see Holland, 2004). After all the practice, the instrumental response (pressing the lever) is no longer sensitive to reinforcer devaluation. The rat continues to respond automatically, regardless of the fact that the sucrose from this lever makes it sick.
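The contrast between goal-directed and habitual responding can be made concrete with a toy calculation. The Python sketch below is only an illustration of the logic, not a model taken from the cited studies: the function `press_tendency`, the 0-to-1 scales, and the habit strengths chosen for "moderate" and "extended" training are all hypothetical assumptions.

```python
def press_tendency(outcome_value, habit_strength):
    """Toy mix of goal-directed and habitual control (illustrative only).

    outcome_value  -- current value of the reinforcer, 0.0 (aversive) to 1.0 (wanted)
    habit_strength -- 0.0 (fully goal-directed) to 1.0 (fully habitual)
    """
    goal_directed = outcome_value  # tracks the reinforcer's current value
    habitual = 1.0                 # responds regardless of the current value
    return (1.0 - habit_strength) * goal_directed + habit_strength * habitual


VALUED, DEVALUED = 1.0, 0.0  # sucrose before and after being paired with illness

# Hypothetical habit strengths after short versus very long training.
for label, habit in [("moderate training", 0.1), ("extended training", 0.9)]:
    before = press_tendency(VALUED, habit)
    after = press_tendency(DEVALUED, habit)
    print(f"{label}: before devaluation {before:.2f}, after {after:.2f}")
```

With a low habit strength, devaluing the reinforcer nearly abolishes the tendency to press; with a high habit strength, pressing barely changes, mirroring the insensitivity to devaluation described above.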

Habits are very common in human experience, and can be useful. You do not need to relearn each day how to make your coffee in the morning or how to brush your teeth. Instrumental behaviors can eventually become habitual, letting us get the job done while being free to think about other things.

Putting Classical and Instrumental Conditioning Together

Classical and operant conditioning are usually studied separately. But outside of the laboratory they almost always occur at the same time. For example, a person who is reinforced for drinking alcohol or eating excessively learns these behaviors in the presence of certain stimuli: a pub, a set of friends, a restaurant, or possibly the couch in front of the TV. These stimuli are also available for association with the reinforcer. In this way, classical and operant conditioning are always intertwined.

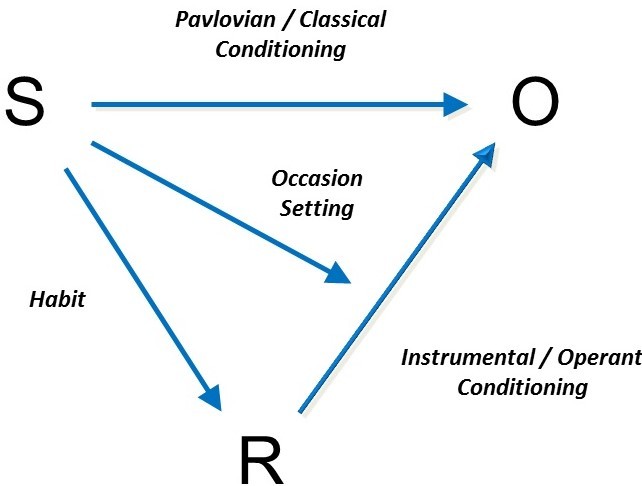

The figure summarizes this idea, and helps review what we have discussed in this module. Generally speaking, any reinforced or punished operant response (R) is paired with an outcome (O) in the presence of some stimulus or set of stimuli (S).

The figure illustrates the types of associations that can be learned in this very general scenario. For one thing, the organism will learn to associate the response and the outcome (R – O). This is instrumental conditioning. The learning process here is probably similar to classical conditioning, with all its emphasis on surprise and prediction error. And, as we discussed while considering the reinforcer devaluation effect, once R – O is learned, the organism will be ready to perform the response if the outcome is desired or valued. The value of the reinforcer can also be influenced by other reinforcers earned for other behaviors in the situation. These factors are at the heart of instrumental learning.

Second, the organism can also learn to associate the stimulus with the reinforcing outcome (S – O). This is the classical conditioning component, and as we have seen, it can have many consequences on behavior. For one thing, the stimulus will come to evoke a system of responses that help the organism prepare for the reinforcer (not shown in the figure): The drinker may undergo changes in body temperature; the eater may salivate and have an increase in insulin secretion. In addition, the stimulus will evoke approach (if the outcome is positive) or retreat (if the outcome is negative). Presenting the stimulus will also prompt the instrumental response.

The third association in the diagram is the one between the stimulus and the response (S – R). As discussed earlier, after a lot of practice, the stimulus may begin to elicit the response directly. This is habit learning, whereby the response occurs relatively automatically, without much mental processing of the relation between the action and the outcome and the outcome's current value.

The final link in the figure is between the stimulus and the response-outcome association [S – (R – O)]. More than just entering into a simple association with the R or the O, the stimulus can signal that the R – O relationship is now in effect. This is what we mean when we say that the stimulus can "set the occasion" for the operant response: It sets the occasion for the response-reinforcer relationship. Through this mechanism, the painter might begin to paint when given the right tools and the opportunity enabled by the canvas. The canvas theoretically signals that the behavior of painting will now be reinforced by positive consequences.

The figure provides a framework that you can use to understand almost any learned behavior you observe in yourself, your family, or your friends. If you would like to understand it more deeply, consider taking a course on learning in the future, which will give you a fuller appreciation of how classical learning, instrumental learning, habit learning, and occasion setting actually work and interact.

Observational Learning

Not all forms of learning are accounted for entirely by classical and operant conditioning. Imagine a child walking up to a group of children playing a game on the playground. The game looks fun, but it is new and unfamiliar. Rather than joining the game immediately, the child opts to sit back and watch the other children play a round or two. Observing the others, the child takes note of the ways in which they behave while playing the game. By watching the behavior of the other kids, the child can figure out the rules of the game and even some strategies for doing well at the game. This is called observational learning.

Observational learning is a component of Albert Bandura's Social Learning Theory (Bandura, 1977), which posits that individuals can learn novel responses via observation of key others' behaviors. Observational learning does not necessarily require reinforcement, but instead hinges on the presence of others, referred to as social models. Social models are typically of higher status or authority compared to the observer, examples of which include parents, teachers, and police officers. In the example above, the children who already know how to play the game could be thought of as being authorities, and are therefore social models, even though they are the same age as the observer. By observing how the social models behave, an individual is able to learn how to act in a certain situation. Other examples of observational learning might include a child learning to place her napkin in her lap by watching her parents at the dinner table, or a customer learning where to find the ketchup and mustard after observing other customers at a hot dog stand.

Bandura theorizes that the observational learning process consists of four parts. The first is attention: quite simply, one must pay attention to what s/he is observing in order to learn. The second part is retention: to learn, one must be able to retain the behavior s/he is observing in memory. The third part of observational learning, initiation, acknowledges that the learner must be able to execute (or initiate) the learned behavior. Lastly, the observer must possess the motivation to engage in observational learning. In our vignette, the child must want to learn how to play the game in order to properly engage in observational learning.

Researchers have conducted countless experiments designed to explore observational learning, the most famous of which is Albert Bandura's "Bobo doll experiment."

In this experiment (Bandura, Ross, & Ross, 1961), Bandura had children individually observe an adult social model interact with a clown doll ("Bobo"). For one group of children, the adult interacted aggressively with Bobo: punching it, kicking it, throwing it, and even hitting it in the face with a toy mallet. Another group of children watched the adult interact with other toys, displaying no aggression toward Bobo. In both instances the adult left and the children were allowed to interact with Bobo on their own. Bandura found that children exposed to the aggressive social model were significantly more likely to behave aggressively toward Bobo, hitting and kicking him, compared to those exposed to the non-aggressive model. The researchers concluded that the children in the aggressive group used their observations of the adult social model's behavior to determine that aggressive behavior toward Bobo was acceptable.

While reinforcement was not required to elicit the children's behavior in Bandura's first experiment, it is important to acknowledge that consequences do play a part within observational learning. A later adaptation of this study (Bandura, Ross, & Ross, 1963) demonstrated that children in the aggression group showed less aggressive behavior if they witnessed the adult model receive punishment for aggressing against Bobo. Bandura referred to this process as vicarious reinforcement, as the children did not experience the reinforcement or punishment directly, yet were still influenced by observing it.

Conclusion

We have covered three primary explanations for how we learn to behave and interact with the world around us. Considering your own experiences, how well do these theories apply to you? Perhaps when reflecting on your personal sense of fashion, you realize that you tend to select clothes others have complimented you on (operant conditioning). Or maybe, thinking back on a new restaurant you tried recently, you realize you chose it because its commercials play happy music (classical conditioning). Or maybe you are now always on time with your assignments, because you saw how others were punished when they were late (observational learning). Regardless of the activity, behavior, or response, there's a good chance your "decision" to do it can be explained based on one of the theories presented in this module.

Outside Resources

- Article: Rescorla, R. A. (1988). Pavlovian conditioning: It's not what you think it is. American Psychologist, 43, 151–160.

- Book: Bouton, M. E. (2007). Learning and behavior: A contemporary synthesis. Sunderland, MA: Sinauer Associates.

- Book: Bouton, M. E. (2009). Learning theory. In B. J. Sadock, V. A. Sadock, & P. Ruiz (Eds.), Kaplan & Sadock's comprehensive textbook of psychiatry (9th ed., Vol. 1, pp. 647–658). New York, NY: Lippincott Williams & Wilkins.

- Book: Domjan, M. (2010). The principles of learning and behavior (6th ed.). Belmont, CA: Wadsworth.

- Video: Albert Bandura discusses the Bobo Doll Experiment.

Discussion Questions

- Describe three examples of Pavlovian (classical) conditioning that you have seen in your own behavior, or that of your friends or family, in the past few days.

- Describe three examples of instrumental (operant) conditioning that you have seen in your own behavior, or that of your friends or family, in the past few days.

- Drugs can be potent reinforcers. Discuss how Pavlovian conditioning and instrumental conditioning can work together to influence drug taking.

- In the modern world, processed foods are highly available and have been engineered to be highly palatable and reinforcing. Discuss how Pavlovian and instrumental conditioning can work together to explain why people often eat too much.

- How does blocking challenge the idea that pairings of a CS and US are sufficient to cause Pavlovian conditioning? What is important in creating Pavlovian learning?

- How does the reinforcer devaluation effect challenge the idea that reinforcers merely "stamp in" the operant response? What does the effect tell us that animals actually learn in operant conditioning?

- With regard to social learning, do you think people learn violence from observing violence in movies? Why or why not?

- What do you think you have learned through social learning? Who are your social models?

Vocabulary

- Blocking

- In classical conditioning, the finding that no conditioning occurs to a stimulus if it is combined with a previously conditioned stimulus during conditioning trials. Suggests that information, surprise value, or prediction error is important in conditioning.

- Categorize

- To sort or arrange different items into classes or categories.

- Classical conditioning

- The procedure in which an initially neutral stimulus (the conditioned stimulus, or CS) is paired with an unconditioned stimulus (or US). The result is that the conditioned stimulus begins to elicit a conditioned response (CR). Classical conditioning is nowadays considered important as both a behavioral phenomenon and as a method to study simple associative learning. Same as Pavlovian conditioning.

- Conditioned compensatory response

- In classical conditioning, a conditioned response that opposes, rather than is the same as, the unconditioned response. It functions to reduce the strength of the unconditioned response. Often seen in conditioning when drugs are used as unconditioned stimuli.

- Conditioned response (CR)

- The response that is elicited by the conditioned stimulus after classical conditioning has taken place.

- Conditioned stimulus (CS)

- An initially neutral stimulus (like a bell, light, or tone) that elicits a conditioned response after it has been associated with an unconditioned stimulus.

- Context

- Stimuli that are in the background whenever learning occurs. For example, the Skinner box or room in which learning takes place is the classic example of a context. However, "context" can also be provided by internal stimuli, such as the sensory effects of drugs (e.g., being under the influence of alcohol has stimulus properties that provide a context) and mood states (e.g., being happy or sad). It can also be provided by a specific period in time; the passage of time is sometimes said to change the "temporal context."

- Discriminative stimulus

- In operant conditioning, a stimulus that signals whether the response will be reinforced. It is said to "set the occasion" for the operant response.

- Extinction

- Decrease in the strength of a learned behavior that occurs when the conditioned stimulus is presented without the unconditioned stimulus (in classical conditioning) or when the behavior is no longer reinforced (in instrumental conditioning). The term describes both the procedure (the US or reinforcer is no longer presented) as well as the result of the procedure (the learned response declines). Behaviors that have been reduced in strength through extinction are said to be "extinguished."

- Fear conditioning

- A type of classical or Pavlovian conditioning in which the conditioned stimulus (CS) is associated with an aversive unconditioned stimulus (US), such as a foot shock. As a consequence of learning, the CS comes to evoke fear. The phenomenon is thought to be involved in the development of anxiety disorders in humans.

- Goal-directed behavior

- Instrumental behavior that is influenced by the animal's knowledge of the association between the behavior and its consequence and the current value of the consequence. Sensitive to the reinforcer devaluation effect.

- Habit

- Instrumental behavior that occurs automatically in the presence of a stimulus and is no longer influenced by the animal's knowledge of the value of the reinforcer. Insensitive to the reinforcer devaluation effect.

- Instrumental conditioning

- Process in which animals learn about the relationship between their behaviors and their consequences. Also known as operant conditioning.

- Law of effect

- The idea that instrumental or operant responses are influenced by their effects. Responses that are followed by a pleasant state of affairs will be strengthened and those that are followed by discomfort will be weakened. Nowadays, the term refers to the idea that operant or instrumental behaviors are lawfully controlled by their consequences.

- Observational learning

- Learning by observing the behavior of others.

- Operant

- A behavior that is controlled by its consequences. The simplest example is the rat's lever-pressing, which is controlled by the presentation of the reinforcer.

- Operant conditioning

- See instrumental conditioning.

- Pavlovian conditioning

- See classical conditioning.

- Prediction error

- When the outcome of a conditioning trial is different from that which is predicted by the conditioned stimuli that are present on the trial (i.e., when the US is surprising). Prediction error is necessary to create Pavlovian conditioning (and associative learning generally). As learning occurs over repeated conditioning trials, the conditioned stimulus increasingly predicts the unconditioned stimulus, and prediction error declines. Conditioning works to correct or reduce prediction error.

- Preparedness

- The idea that an organism's evolutionary history can make it easy to learn a particular association. Because of preparedness, you are more likely to associate the taste of tequila, and not the circumstances surrounding drinking it, with getting sick. Similarly, humans are more likely to associate images of spiders and snakes than flowers and mushrooms with aversive outcomes like shocks.

- Punisher

- A stimulus that decreases the strength of an operant behavior when it is made a consequence of the behavior.

- Quantitative law of effect

- A mathematical rule that states that the effectiveness of a reinforcer at strengthening an operant response depends on the amount of reinforcement earned for all alternative behaviors. A reinforcer is less effective if there is a lot of reinforcement in the environment for other behaviors.

- Reinforcer

- Any consequence of a behavior that strengthens the behavior or increases the likelihood that it will be performed again.

- Reinforcer devaluation effect

- The finding that an animal will stop performing an instrumental response that once led to a reinforcer if the reinforcer is separately made aversive or undesirable.

- Renewal effect

- Recovery of an extinguished response that occurs when the context is changed after extinction. Especially strong when the change of context involves return to the context in which conditioning originally occurred. Can occur after extinction in either classical or instrumental conditioning.

- Social Learning Theory

- The theory that people can learn new responses and behaviors by observing the behavior of others.

- Social models

- Authorities that are the targets for observation and who model behaviors.

- Spontaneous recovery

- Recovery of an extinguished response that occurs with the passage of time after extinction. Can occur after extinction in either classical or instrumental conditioning.

- Stimulus control

- When an operant behavior is controlled by a stimulus that precedes it.

- Taste aversion learning

- The phenomenon in which a taste is paired with sickness, and this causes the organism to reject (and dislike) that taste in the future.

- Unconditioned response (UR)

- In classical conditioning, an innate response that is elicited by a stimulus before (or in the absence of) conditioning.

- Unconditioned stimulus (US)

- In classical conditioning, the stimulus that elicits the response before conditioning occurs.

- Vicarious reinforcement

- Learning that occurs by observing the reinforcement or punishment of another person.

References

- Balleine, B. W. (2005). Neural bases of food-seeking: Affect, arousal, and reward in corticostriatolimbic circuits. Physiology & Behavior, 86, 717–730.

- Bandura, A. (1977). Social learning theory. Englewood Cliffs, NJ: Prentice Hall.

- Bandura, A., Ross, D., & Ross, S. A. (1963). Imitation of film-mediated aggressive models. Journal of Abnormal and Social Psychology, 66(1), 3–11.

- Bandura, A., Ross, D., & Ross, S. A. (1961). Transmission of aggression through the imitation of aggressive models. Journal of Abnormal and Social Psychology, 63(3), 575–582.

- Bernstein, I. L. (1991). Aversion conditioning in response to cancer and cancer treatment. Clinical Psychology Review, 11, 185–191.

- Bouton, M. E. (2004). Context and behavioral processes in extinction. Learning & Memory, 11, 485–494.

- Colwill, R. M., & Rescorla, R. A. (1986). Associative structures in instrumental learning. In G. H. Bower (Ed.), The psychology of learning and motivation (Vol. 20, pp. 55–104). New York, NY: Academic Press.

- Craske, M. G., Kircanski, K., Zelikowsky, M., Mystkowski, J., Chowdhury, N., & Baker, A. (2008). Optimizing inhibitory learning during exposure therapy. Behaviour Research and Therapy, 46, 5–27.

- Dickinson, A., & Balleine, B. W. (1994). Motivational control of goal-directed behavior. Animal Learning & Behavior, 22, 1–18.

- Fanselow, M. S., & Poulos, A. M. (2005). The neuroscience of mammalian associative learning. Annual Review of Psychology, 56, 207–234.

- Herrnstein, R. J. (1970). On the law of effect. Journal of the Experimental Analysis of Behavior, 13, 243–266.

- Holland, P. C. (2004). Relations between Pavlovian-instrumental transfer and reinforcer devaluation. Journal of Experimental Psychology: Animal Behavior Processes, 30, 104–117.

- Kamin, L. J. (1969). Predictability, surprise, attention, and conditioning. In B. A. Campbell & R. M. Church (Eds.), Punishment and aversive behavior (pp. 279–296). New York, NY: Appleton-Century-Crofts.

- Mineka, S., & Zinbarg, R. (2006). A contemporary learning theory perspective on the etiology of anxiety disorders: It's not what you thought it was. American Psychologist, 61, 10–26.

- Pearce, J. M., & Bouton, M. E. (2001). Theories of associative learning in animals. Annual Review of Psychology, 52, 111–139.

- Rescorla, R. A., & Wagner, A. R. (1972). A theory of Pavlovian conditioning: Variations in the effectiveness of reinforcement and nonreinforcement. In A. H. Black & W. F. Prokasy (Eds.), Classical conditioning II: Current research and theory (pp. 64–99). New York, NY: Appleton-Century-Crofts.

- Scalera, G., & Bavieri, M. (2009). Role of conditioned taste aversion on the side effects of chemotherapy in cancer patients. In S. Reilly & T. R. Schachtman (Eds.), Conditioned taste aversion: Behavioral and neural processes (pp. 513–541). New York, NY: Oxford University Press.

- Siegel, S. (1989). Pharmacological conditioning and drug effects. In A. J. Goudie & M. Emmett-Oglesby (Eds.), Psychoactive drugs (pp. 115–180). Clifton, NY: Humana Press.

- Siegel, S., Hinson, R. E., Krank, M. D., & McCully, J. (1982). Heroin "overdose" death: Contribution of drug-associated environmental cues. Science, 216, 436–437.

- Spreat, S., & Spreat, S. R. (1982). Learning principles. In V. Voith & P. L. Borchelt (Eds.), Veterinary clinics of North America: Small animal practice (pp. 593–606). Philadelphia, PA: W. B. Saunders.

- Thompson, R. F., & Steinmetz, J. E. (2009). The role of the cerebellum in classical conditioning of discrete behavioral responses. Neuroscience, 162, 732–755.

- Timberlake, W. L. (2001). Motivational modes in behavior systems. In R. R. Mowrer & S. B. Klein (Eds.), Handbook of contemporary learning theories (pp. 155–210). Mahwah, NJ: Lawrence Erlbaum Associates, Inc.

- Wasserman, E. A. (1995). The conceptual abilities of pigeons. American Scientist, 83, 246–255.

Authors

Creative Commons License

Conditioning and Learning by Mark E. Bouton is licensed under a Creative Commons Attribution-NonCommercial-ShareAlike 4.0 International License. Permissions beyond the scope of this license may be available in our Licensing Agreement.

How to cite this Noba module using APA Style

Bouton, M. E. (2022). Conditioning and learning. In R. Biswas-Diener & E. Diener (Eds.), Noba textbook series: Psychology. Champaign, IL: DEF publishers. Retrieved from http://noba.to/ajxhcqdr

Source: https://nobaproject.com/modules/conditioning-and-learning
